Agibot Groundbreaking Release – New Perspectives on Task Embodiment and Expert Data Diversity

China, 17th Oct 2025 — A joint research team from Agibot, Chuangzhi Academy, and The University of Hong Kong has published a breakthrough study on data diversity in robot manipulation learning. The research explores three major dimensions: task diversity, robot embodiment diversity, and expert diversity. It challenges the long-held belief that “more diverse data is always better,” offering fresh insights for building scalable robot operating systems.

Specialist vs. Generalist Training

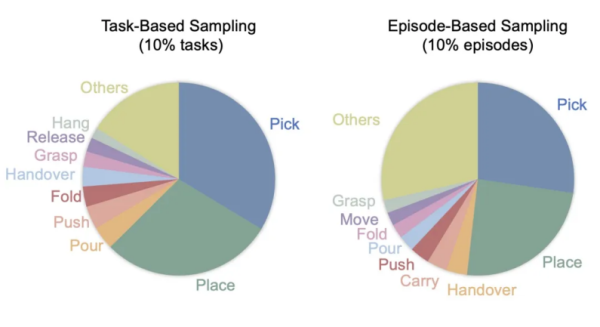

Researchers have long debated whether robot models should rely on task-specific “specialist” training or broad “generalist” learning. To investigate, the team designed a controlled comparison using two equal-sized pre-training datasets drawn from the Agibot World dataset.

- The Specialist Dataset consisted of the 10% of tasks most relevant to the target tasks, emphasizing five atomic skills: pick, place, grasp, pour, and fold. This approach narrowed skill diversity but concentrated on the skills that mattered most.

- The Generalist Dataset randomly sampled 10% of trajectories from each task, preserving full task diversity. Although fewer trajectories related directly to target skills (59.2% vs. 71.1%), the dataset maintained balance across all skills.
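The two sampling strategies can be contrasted in a short sketch. This is an illustration under stated assumptions, not the team's pipeline: the toy corpus, the helper names `specialist_split` and `generalist_split`, and the numeric task relevance scores are all hypothetical. It shows how two datasets of identical size can cover very different numbers of tasks.

```python
import random

def specialist_split(tasks, relevance, budget):
    """Fill the budget with whole tasks, most relevant first.

    tasks: dict mapping task name -> list of trajectory ids.
    relevance: dict mapping task name -> hypothetical relevance score to the targets.
    """
    chosen = []
    for task in sorted(tasks, key=lambda t: relevance[t], reverse=True):
        remaining = budget - len(chosen)
        if remaining <= 0:
            break
        chosen.extend(tasks[task][:remaining])  # take this task's trajectories, trimmed to the budget
    return chosen

def generalist_split(tasks, fraction, seed=0):
    """Randomly sample the same fraction of trajectories from every task."""
    rng = random.Random(seed)
    chosen = []
    for trajectories in tasks.values():
        k = round(len(trajectories) * fraction)
        chosen.extend(rng.sample(trajectories, k))
    return chosen

def task_count(split):
    """Number of distinct tasks in a split (ids look like 'task/index')."""
    return len({traj.split("/")[0] for traj in split})

# Toy corpus: 20 tasks x 50 trajectories, with made-up relevance scores.
tasks = {f"task{i:02d}": [f"task{i:02d}/{j}" for j in range(50)] for i in range(20)}
relevance = {name: i for i, name in enumerate(tasks)}

specialist = specialist_split(tasks, relevance, budget=100)
generalist = generalist_split(tasks, fraction=0.1)
print(len(specialist), task_count(specialist))  # same size, few tasks
print(len(generalist), task_count(generalist))  # same size, every task
```

Both splits hold 100 trajectories, but the specialist split covers only the two highest-scoring tasks while the generalist split touches all 20, which is the kind of scene and object variety the study credits for the Generalist gains.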

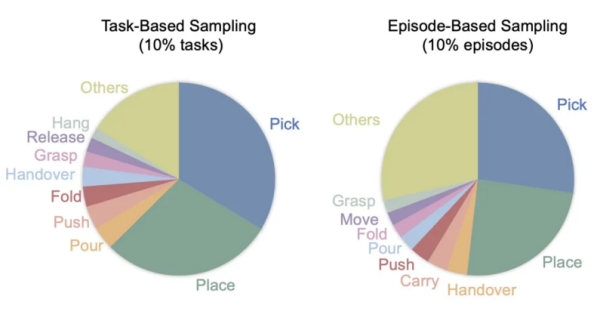

The results were striking. The Generalist approach outperformed the Specialist one across four challenging tasks, showing an average improvement of 27%. On complex tasks like Make Sandwich and Pour Water, the gains reached 39% and 70% respectively.

Why did diversity win? The broader sampling captured richer scenes, object variations, and lighting conditions. This unintentional diversity strengthened the model’s adaptability and improved generalization across environments.

Scaling Laws in Robot Learning

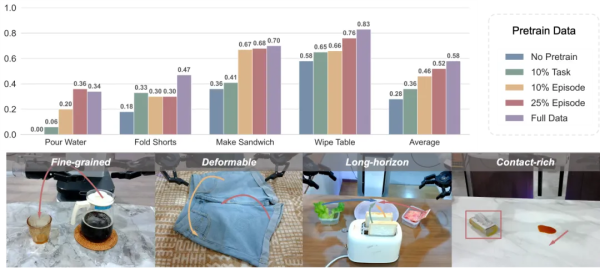

After confirming the value of diversity, the team explored another question: does increasing data volume still enhance performance once task diversity is achieved? Results showed a consistent upward trend. The GO-1 model’s average score rose steadily with more pre-training data, following a strict scaling law.

By fitting a power-law curve, $Y = 1.24X^{-0.08}$, researchers discovered a predictable relationship between performance and data size, with a strong correlation coefficient of -0.99.
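A power law of the form $Y = aX^{b}$ is commonly fitted as a straight line in log-log space, since $\log Y = \log a + b \log X$. The sketch below assumes that approach (the release does not say how the fit was done) and uses synthetic points generated from the reported curve, not the study's measurements. The release also does not state the units of X or exactly which quantity Y denotes; note that with a negative exponent Y decreases as X grows, which is consistent with the negative correlation coefficient.

```python
import numpy as np

def power_law(x, a=1.24, b=-0.08):
    """Evaluate Y = a * X**b; defaults are the coefficients reported in the release."""
    return a * np.power(x, b)

def fit_power_law(x, y):
    """Fit Y = a * X**b by least squares on log Y = log a + b * log X."""
    b, log_a = np.polyfit(np.log(x), np.log(y), deg=1)  # returns [slope, intercept]
    return float(np.exp(log_a)), float(b)

# Synthetic, noise-free points taken from the reported curve (X in arbitrary units).
x = np.array([1.0, 2.0, 4.0, 8.0, 16.0])
y = power_law(x)
a_hat, b_hat = fit_power_law(x, y)
print(f"a = {a_hat:.2f}, b = {b_hat:.2f}")
```

Because the fit is linear in log-log space, the exponent has a simple reading: each doubling of X multiplies Y by $2^{-0.08} \approx 0.95$.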

This finding marks a major step in embodied intelligence. Previous scaling studies focused on single-task or small models without pre-training. This study extends scaling law research to multi-task pre-training, proving that diverse, large-scale data leads to continuous and measurable performance growth.

Single vs. Multi-Embodiment Training

Traditionally, experts believed that to generalize across robot platforms, pre-training must include many robot embodiments. Datasets like Open X-Embodiment (OXE) followed this idea, covering 22 robots. But such diversity also adds complexity because each robot differs in structure and movement space.

The research team questioned this assumption. They observed that while robots vary in form, their end-effector actions can often follow identical world-coordinate trajectories. This insight led to a bold hypothesis: high-quality single-embodiment pre-training might transfer knowledge as effectively as multi-embodiment data.

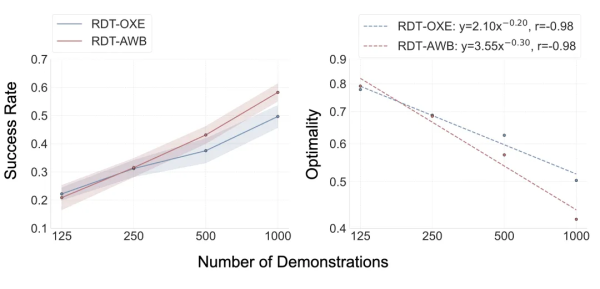

To test it, they compared two models:

- RDT-AWB – trained on 1 million Agibot World trajectories (single robot).

- RDT-OXE – trained on 2.4 million OXE trajectories (22 robots).

Tests on Franka, Arx, and Piper platforms revealed surprising results. RDT-OXE initially had an edge, but RDT-AWB soon caught up and then surpassed it beyond 250 samples per task. The single-embodiment model even showed better scaling efficiency, following a power-law growth curve.

In real-world Agilex tests, RDT-AWB outperformed RDT-OXE on three of four tasks, proving that quality data from one robot can outperform mixed data from many.

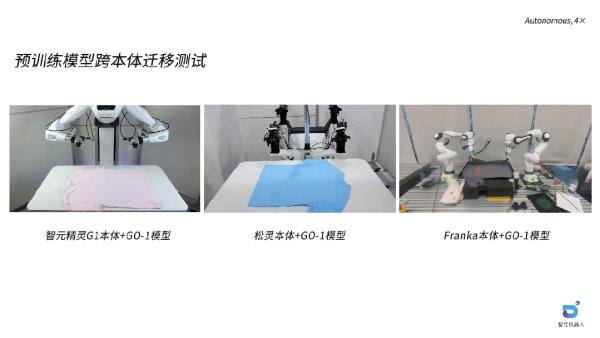

Further trials with the GO-1 model confirmed the finding. Pre-trained only on Agibot World data, GO-1 adapted to new platforms like Lingong and Franka using just 200 samples, achieving 30% better scores than models trained from scratch.

These results overturn the belief that multi-embodiment data is essential. Single-robot pre-training can simplify pipelines, cut data costs, and still deliver superior cross-platform transfer.

Understanding Expert Diversity

Expert diversity, meaning differences in operator behavior, also affects learning. Unlike the standardized, internet-scale datasets used in NLP or computer vision, robot datasets consist of continuous robot motions that are highly sensitive to operator behavior, so they depend heavily on each human's demonstration style.

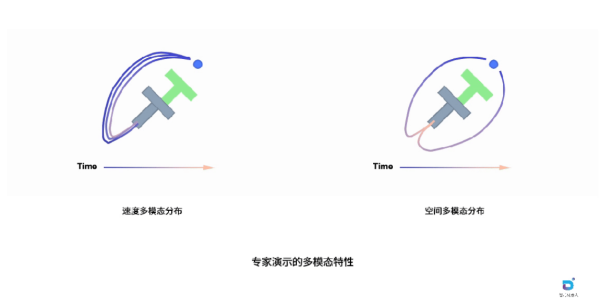

In the PushT task, for example, operators varied in how they pushed a T-shaped object toward a target. Some approached from the left or right, creating distinct spatial paths (spatial diversity). Others differed in speed (velocity diversity). Spatial differences provide valuable learning signals, but velocity variations often add noise, making learning harder without improving results.
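One way to make the spatial/velocity distinction concrete is to resample a demonstration by arc length: that keeps the route and discards the timing, while the per-step speeds keep the timing and discard the route. This is a minimal sketch, not the study's method; the helper names and the L-shaped toy route standing in for a PushT demonstration are hypothetical.

```python
import numpy as np

def split_path_and_speed(traj, dt=1.0, n_samples=50):
    """Separate a trajectory into its spatial path and its speed profile.

    traj: (T, d) array of positions sampled every dt time units.
    Returns the path resampled at n_samples evenly spaced arc lengths
    (timing removed) and the per-step speeds (timing only).
    """
    seg_len = np.linalg.norm(np.diff(traj, axis=0), axis=1)
    speed = seg_len / dt
    arc = np.concatenate([[0.0], np.cumsum(seg_len)])  # cumulative distance travelled
    query = np.linspace(0.0, arc[-1], n_samples)
    path = np.stack([np.interp(query, arc, traj[:, k]) for k in range(traj.shape[1])], axis=1)
    return path, speed

def l_shaped_path(s):
    """Toy PushT-style route: move 1 unit along x, then 1 unit along y (s in [0, 2])."""
    s = np.asarray(s, dtype=float)
    return np.stack([np.minimum(s, 1.0), np.maximum(s - 1.0, 0.0)], axis=1)

# Two hypothetical operators follow the same route at different speeds.
slow_demo = l_shaped_path(np.linspace(0.0, 2.0, 81))  # 80 steps
fast_demo = l_shaped_path(np.linspace(0.0, 2.0, 21))  # 20 steps

path_slow, speed_slow = split_path_and_speed(slow_demo)
path_fast, speed_fast = split_path_and_speed(fast_demo)
print(np.allclose(path_slow, path_fast))     # spatial component matches
print(speed_slow.mean(), speed_fast.mean())  # velocity component differs
```

The two demonstrations carry the same spatial strategy and differ only in speed, which is the kind of variation the study describes as adding noise without adding useful signal.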

Debiasing for Efficient Learning

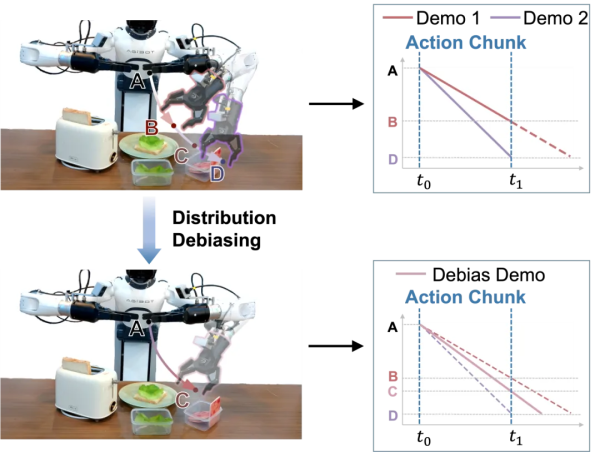

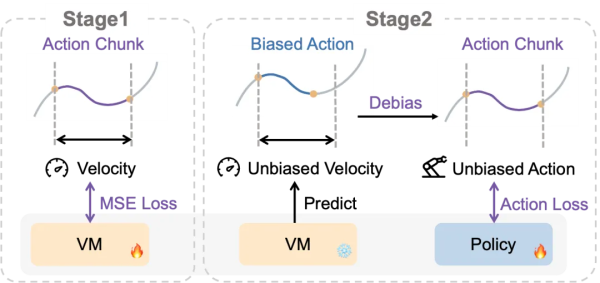

To fix this, the team developed a two-stage distribution debiasing framework using a Velocity Model (VM).

- Stage One: The VM learns the normal speed distribution for each action chunk.

- Stage Two: During training, it predicts unbiased speeds and adjusts actions accordingly.

This process removes unhelpful velocity noise and helps the policy model focus on meaningful strategies.
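The two stages can be illustrated with a deliberately simple stand-in. This is a hedged sketch, not GO-1-Pro's Velocity Model: the release says the VM learns a speed distribution per action chunk, whereas the hypothetical `MeanVelocityModel` below only stores the mean chunk speed for each context key, and `debias_chunk` is an assumed adjustment that rescales displacement actions so their directions (the spatial strategy) stay fixed while their speed matches the prediction. The rescaled chunks would then serve as policy training targets.

```python
import numpy as np
from collections import defaultdict

def chunk_speed(deltas):
    """Mean per-step speed of an action chunk given as (H, d) displacement actions."""
    return float(np.mean(np.linalg.norm(deltas, axis=1)))

class MeanVelocityModel:
    """Hypothetical stand-in for the Velocity Model: mean chunk speed per context key."""

    def fit(self, contexts, chunks):
        # Stage one: estimate a "normal" speed for each context from all demonstrations.
        speeds = defaultdict(list)
        for ctx, chunk in zip(contexts, chunks):
            speeds[ctx].append(chunk_speed(chunk))
        self.speed_ = {ctx: float(np.mean(v)) for ctx, v in speeds.items()}
        return self

    def predict(self, ctx):
        return self.speed_[ctx]

def debias_chunk(chunk, target_speed, eps=1e-8):
    """Stage two: rescale displacements to target_speed; directions are unchanged."""
    current = chunk_speed(chunk)
    if current < eps:
        return chunk.copy()  # a stationary chunk has no direction to rescale
    return chunk * (target_speed / current)

# Two hypothetical operators push in the same direction at different speeds.
H = 4
direction = np.array([1.0, 0.0])
slow = np.tile(direction * 0.5, (H, 1))
fast = np.tile(direction * 1.5, (H, 1))
contexts = ["approach", "approach"]

vm = MeanVelocityModel().fit(contexts, [slow, fast])
debiased = [debias_chunk(c, vm.predict(ctx)) for ctx, c in zip(contexts, [slow, fast])]
print(vm.predict("approach"), np.allclose(debiased[0], debiased[1]))
```

After debiasing, both operators' chunks become the same training target, so the policy sees one spatial strategy instead of two speed variants of it.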

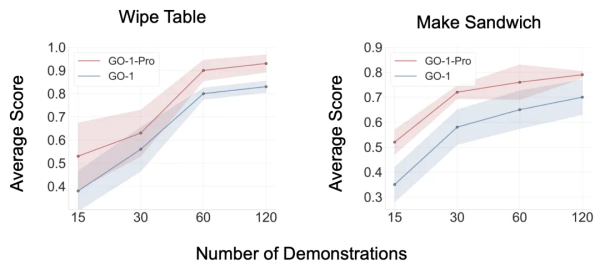

The improved model, GO-1-Pro, outperformed the standard GO-1 on both Make Sandwich and Wipe Table tasks at all data scales. It also achieved similar results with only half the data, doubling efficiency.

In low-data conditions, the advantage was dramatic: a 48% improvement on Make Sandwich and 39% on Wipe Table. By filtering out velocity noise, the model focused better on spatial patterns, improving accuracy and data efficiency even with limited samples.

Redefining Data Diversity

This research redefines data scaling for robot manipulation. It offers three insights:

- Task diversity matters more than large volumes of single-task data.

- Embodiment diversity isn’t always required for cross-platform transfer.

- Expert diversity, if unfiltered, can harm performance due to velocity noise.

The study proves that quality beats quantity. True progress comes from understanding which kinds of diversity help, which hurt, and how to use data intelligently rather than collecting it endlessly. It sets a new direction for more efficient and precise robot learning.

Company Details

Organization: Shanghai Zhiyuan Innovation Technology Co., Ltd.

Contact Person: Jocelyn Lee

Website: https://www.zhiyuan-robot.com

City: Shanghai

Country: China

Release Id: 17102535602